K-fold cross-validation lets you train and test your model k times on different subsets of the training data and build up an estimate of the performance of a machine learning model on unseen data. The drawback is that it’s time-consuming and often impractical for complex models, such as deep neural networks. Mastering model complexity is an integral part of building robust predictive models.
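The train-and-test-k-times procedure can be sketched in plain Python. This is a minimal illustration, not a reference implementation: the helper names (`k_fold_indices`, `cross_validate`), the toy data, and the mean-predicting "model" are all assumptions made for the example.

```python
import random
from statistics import mean


def k_fold_indices(n_samples, k, seed=0):
    """Shuffle sample indices and split them into k nearly equal folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Fold i takes every k-th shuffled index starting at position i.
    return [indices[i::k] for i in range(k)]


def cross_validate(xs, ys, k, fit, score):
    """Train on k-1 folds, score on the held-out fold, average the scores."""
    scores = []
    for held_out in k_fold_indices(len(xs), k):
        held = set(held_out)
        train_x = [x for i, x in enumerate(xs) if i not in held]
        train_y = [y for i, y in enumerate(ys) if i not in held]
        model = fit(train_x, train_y)
        test_x = [xs[i] for i in held_out]
        test_y = [ys[i] for i in held_out]
        scores.append(score(model, test_x, test_y))
    return mean(scores)


# Hypothetical model: always predict the mean of the training targets.
def fit_mean(train_x, train_y):
    return mean(train_y)


def mse(model, test_x, test_y):
    return mean((y - model) ** 2 for y in test_y)


xs = list(range(20))
ys = [2.0 * x + 1.0 for x in xs]
cv_error = cross_validate(xs, ys, k=5, fit=fit_mean, score=mse)
print(f"5-fold cross-validated MSE: {cv_error:.2f}")
```

Because every sample is held out exactly once, the averaged score uses all of the data for evaluation without ever scoring a model on samples it was trained on.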

Excessive Model Complexity Relative To Data Size

Whenever the window width is large enough, the correlation coefficients are stable and no longer depend on the window width. Therefore, a correlation matrix can be created by calculating a correlation coefficient between each pair of investigated variables. This matrix can be represented topologically as a complex network in which direct and indirect influences between variables are visualized.
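As a rough sketch of the correlation matrix described above, the following computes Pearson coefficients between every pair of variables, plus the same coefficient over a sliding window. The choice of Pearson correlation, the function names, and the toy series are assumptions for illustration; the text does not say which coefficient is used.

```python
from math import sqrt


def pearson(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / sqrt(var_a * var_b)


def correlation_matrix(series):
    """Nested dict holding the coefficient for every pair of named variables."""
    names = list(series)
    return {a: {b: pearson(series[a], series[b]) for b in names} for a in names}


def windowed_correlation(a, b, width):
    """Coefficient computed over each sliding window of the given width."""
    return [
        pearson(a[i:i + width], b[i:i + width])
        for i in range(len(a) - width + 1)
    ]


# Toy variables (made up): y rises with x, z falls as x rises.
series = {
    "x": [1, 2, 3, 4, 5, 6],
    "y": [2, 4, 6, 8, 10, 12],
    "z": [6, 5, 4, 3, 2, 1],
}
matrix = correlation_matrix(series)
rolling = windowed_correlation(series["x"], series["y"], width=3)
```

Treating each variable as a node and each strong coefficient as an edge gives the network view mentioned above.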

The Significance Of Bias And Variance

The bias-variance tradeoff describes the relationship between bias and variance in model performance. Ideally, you want a model that both accurately captures the patterns in the training data and generalizes well to unseen data. High-variance learning methods may represent their training dataset well but are prone to overfitting to noisy or unrepresentative training data. In contrast, algorithms with high bias typically produce simpler models that don’t tend to overfit but may underfit their training data, failing to capture the patterns in the dataset. Variance is a measure of how much the model’s predictions fluctuate across different training datasets.
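For squared-error loss, the tradeoff is commonly written as a decomposition of the expected prediction error. The notation is an assumption introduced here, not taken from the text: the target is $y = f(x) + \varepsilon$ with noise variance $\sigma^2$, and $\hat{f}$ is the model fit on a randomly drawn training set, so the expectations run over training sets and noise.

```latex
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
  = \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{irreducible noise}}
```

Simple models tend to make the first term large and the second small; flexible models do the reverse. The third term cannot be reduced by any choice of model.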

What Is Underfitting In Machine Learning

In this case, that happens at 5 degrees. As the flexibility increases beyond this point, the error on held-out data increases because the model has memorized the training data and the noise. Cross-validation yielded the second-best model on this testing data, but in the long run we expect our cross-validation model to perform best. The exact metrics depend on the testing set, but on average, the best model from cross-validation will outperform all other models. Generalization describes how well the concepts learned by a machine learning model apply to specific examples that weren’t used during training. You want to create a model that generalizes as accurately as possible.
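Choosing model flexibility by held-out error can be sketched as follows, assuming that "degrees" refers to polynomial degree. The synthetic sine data, the noise level, and the helper names are all made up for illustration, and this is not the experiment the text describes. The fit solves the normal equations in plain Python, which is adequate for the low degrees used here but is not a numerically robust general method.

```python
import math
import random


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def fit_poly(xs, ys, degree):
    """Least-squares polynomial coefficients, lowest power first."""
    powers = range(degree + 1)
    a = [[sum(x ** (i + j) for x in xs) for j in powers] for i in powers]
    b = [sum(y * x ** i for x, y in zip(xs, ys)) for i in powers]
    return solve(a, b)


def mse(coeffs, xs, ys):
    preds = [sum(c * x ** i for i, c in enumerate(coeffs)) for x in xs]
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(xs)


rng = random.Random(0)


def make_data(n):
    """Synthetic data (an assumption): a sine curve plus Gaussian noise."""
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    ys = [math.sin(3 * x) + rng.gauss(0, 0.2) for x in xs]
    return xs, ys


train_x, train_y = make_data(30)
val_x, val_y = make_data(30)

degrees = range(1, 9)
train_err, val_err = {}, {}
for d in degrees:
    coeffs = fit_poly(train_x, train_y, d)
    train_err[d] = mse(coeffs, train_x, train_y)
    val_err[d] = mse(coeffs, val_x, val_y)

# The selected degree is the one with the lowest held-out error.
best_degree = min(val_err, key=val_err.get)
for d in degrees:
    print(f"degree {d}: train {train_err[d]:.3f}  validation {val_err[d]:.3f}")
print("selected degree:", best_degree)
```

Training error can only improve as the degree grows, so it cannot be used to pick the degree; the held-out error is what reveals where extra flexibility stops helping.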

Generalization In Machine Learning

For instance, data augmentation applies transformations such as translation, flipping, and rotation to input images. A statistical model is said to be overfitted when it doesn’t make accurate predictions on testing data even though it fits the training data well. When a model is fit too closely to its training data, it starts learning from the noise and inaccurate entries in the data set. The model then fails to categorize the data correctly because of too many details and too much noise.
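The three image transformations named above can be sketched on a tiny image stored as a list of pixel rows. This representation and the function names are assumptions for illustration; real pipelines typically use an image or array library.

```python
def flip_horizontal(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]


def rotate_90_clockwise(image):
    """Rotate the image a quarter turn clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def translate_right(image, shift, fill=0):
    """Shift pixels right by `shift` columns, padding the left with `fill`."""
    width = len(image[0])
    return [([fill] * shift + row)[:width] for row in image]


image = [
    [1, 2, 3],
    [4, 5, 6],
]

# Each transformed copy becomes an extra training example with the same label.
augmented = [
    flip_horizontal(image),
    rotate_90_clockwise(image),
    translate_right(image, shift=1),
]
```

The model sees more varied inputs for each label, which makes it harder to memorize incidental details of individual training images.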

She is purely interested in learning the key concepts and the problem-solving approach in the math class rather than just memorizing the solutions presented. He is the most competitive student, who focuses on memorizing each question taught in class instead of focusing on the key concepts.

The bias is generally low for complex models like trees, whereas it is significant for simple models like linear classifiers. The variance indicates how much the trained algorithm’s predictions may fluctuate depending on the data it was trained on. In other words, the variance characterizes the sensitivity of an algorithm to changes in the data. As a rule, simple models have low variance, and complex algorithms have high variance. To understand the accuracy of machine learning models, it’s important to test for model fitness. K-fold cross-validation is one of the most popular methods for evaluating the accuracy of a model.

In such cases, the training process can be stopped early to prevent further overfitting. Overfitting and underfitting are a fundamental problem that trips up even experienced data analysts. In my lab, I have seen many grad students fit a model with extremely low error to their data and then eagerly write a paper with the results.
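Stopping training early is usually driven by a validation metric. The sketch below assumes a "patience" rule, where training halts once the validation loss has not improved for a set number of epochs. The rule, the function name, and the loss values are illustrative assumptions, not something the text specifies.

```python
def early_stopping(val_losses, patience=2):
    """Return (best_epoch, stop_epoch) for a stream of validation losses.

    Training stops once `patience` epochs pass without a new best loss;
    the weights from `best_epoch` are the ones to keep.
    """
    best_epoch, best_loss = 0, float("inf")
    stop_epoch = len(val_losses) - 1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss = epoch, loss
        elif epoch - best_epoch >= patience:
            stop_epoch = epoch
            break
    return best_epoch, stop_epoch


# Made-up validation losses: improvement stalls after epoch 2.
losses = [0.9, 0.6, 0.5, 0.55, 0.6, 0.4]
best_epoch, stop_epoch = early_stopping(losses, patience=2)
print(f"keep weights from epoch {best_epoch}, stopped at epoch {stop_epoch}")
```

Note the cost of the rule: training halts at epoch 4 and never reaches the lower loss at epoch 5. A larger patience trades extra training time for a lower chance of stopping too soon.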

In other words, the model shows a high ML metric during training, but in production, the metric is significantly lower. Our two failures to learn English have made us much wiser, and we now decide to use a validation set. We use both Shakespeare’s work and the Friends show because we’ve learned that more data almost always improves a model. The difference this time is that after training and before we hit the streets, we evaluate our model on a group of friends who get together every week to discuss current events in English.

However, unlike overfitted models, underfitted models show high bias and low variance in their predictions. This illustrates the bias-variance tradeoff, which appears as an underfitted model shifts toward an overfitted state. As the model learns, its bias decreases, but its variance can increase as it becomes overfitted. When fitting a model, the goal is to find the “sweet spot” between underfitting and overfitting, so that it can establish a dominant trend and apply it broadly to new datasets.

- Underfit, and your model resembles a lazy, underprepared student, failing to grasp even the most basic patterns in the data.

- The cross-validation error with the underfit and overfit models is off the chart!

- Label quality metrics, including label consistency checks and label distribution analysis, help uncover noise or anomalies that contribute to overfitting.

- This technique lets us tune the hyperparameters of the neural network or machine learning model and test it using completely unseen data.
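Tuning hyperparameters and then testing on completely unseen data requires three disjoint subsets. A minimal sketch of such a split follows; the 60/20/20 proportions and the function name are assumptions chosen for illustration.

```python
import random


def train_val_test_split(items, val_fraction=0.2, test_fraction=0.2, seed=0):
    """Shuffle a copy of the items and cut it into three disjoint subsets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    n_val = round(len(shuffled) * val_fraction)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


samples = list(range(100))
train, val, test = train_val_test_split(samples)

# Fit on `train`, compare hyperparameter settings on `val`,
# and touch `test` only once, for the final estimate.
print(len(train), len(val), len(test))
```

Keeping the test subset out of every tuning decision is what makes its score an honest estimate of performance on new data.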

On the other hand, a low-bias, high-variance model may overfit the data, capturing the noise along with the underlying pattern. Note that the early signs of overfitting may not be immediately evident. When underfitting occurs, the model fails to establish key relationships and patterns in the data, making it unable to adapt to or correctly interpret new, unseen data.

If a model has very good training accuracy, it means the model has low bias. You then average the scores across all iterations to get the final assessment of the predictive model. Diagnostic plots, such as residual plots, quantile-quantile (Q-Q) plots, and calibration plots, can provide valuable insights into model fit. These graphical representations can help identify patterns, outliers, and deviations from the expected distributions, complementing the quantitative assessment of model fit. Similarly, suppose the machine is fed rules such as: a ball will always fall within a certain range of diameters, and it will have lines across its surface. Then the machine would not recognize a golf ball or a table tennis ball as a ball, since the diameters of these balls are smaller.

Leave-One-Out Cross-Validation (LOOCV) is a special case of K-Fold Cross-Validation, where K equals the number of instances in the dataset. In LOOCV, the model is trained on all instances except one, and the remaining instance is used for validation. This process is repeated for each instance in the dataset, and the performance metric is calculated as the average across all iterations. LOOCV is computationally expensive but can provide a reliable estimate of model performance, especially for small datasets. So, what do overfitting and underfitting mean in the context of your regression model?
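The LOOCV loop described above can be sketched directly. The 1-nearest-neighbor predictor and the toy data are assumptions chosen to keep the example small; any model that is trained on the remaining instances would fit in its place.

```python
def loocv_mse(xs, ys, predict):
    """Hold out each instance once, predict it from the rest, average the error."""
    errors = []
    for i in range(len(xs)):
        train_x = xs[:i] + xs[i + 1:]
        train_y = ys[:i] + ys[i + 1:]
        prediction = predict(train_x, train_y, xs[i])
        errors.append((prediction - ys[i]) ** 2)
    return sum(errors) / len(errors)


# Hypothetical model: return the target of the closest training point.
def nearest_neighbor(train_x, train_y, query):
    nearest = min(range(len(train_x)), key=lambda j: abs(train_x[j] - query))
    return train_y[nearest]


xs = [0, 1, 2, 3, 4]
ys = [0, 1, 2, 3, 4]
score = loocv_mse(xs, ys, nearest_neighbor)
print("LOOCV mean squared error:", score)
```

The loop calls the model once per instance, which is why the cost grows with dataset size and the method is mostly used on small datasets.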

Generalization in machine learning measures how well a model classifies unseen data samples. A model is said to generalize well if it can accurately predict data samples from varied sets. How can you prevent these modeling errors from harming the performance of your model? Underfitting occurs when a model is too simplistic to capture the underlying patterns in the data. It lacks the complexity needed to adequately represent the relationships present, resulting in poor performance on both the training data and new data. Note that bias and variance are not the only factors influencing model performance.

Transform Your Business With AI Software Development Solutions https://www.globalcloudteam.com/ — be successful, be the first!

Round Rugs

Round Rugs  Wool Rugs

Wool Rugs  Vintage Rugs

Vintage Rugs

Carpet Tiles

Carpet Tiles  Carpet

Carpet

Embossed Rug

Embossed Rug  Plain Rug

Plain Rug

2.5'*4'

2.5'*4'  2'*3'

2'*3'  3'*5'

3'*5'  5*7.5

5*7.5

Artificial Grass

Artificial Grass  Mats

Mats

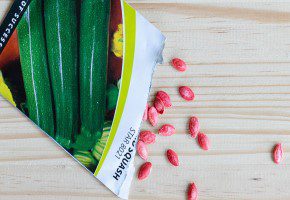

Soil

Soil  Fertilizer

Fertilizer  Pesticides

Pesticides